Large Language Models: Where They Shine and Where They Struggle

Large language models, or LLMs, are everywhere. They get some things amazingly right and other things interestingly wrong. These models generate text in response to a user’s prompt, and sometimes that text is unreliable. A classic example pops up when someone asks, “In our solar system, what planet has the most moons?” Answered from memory, without checking, the reply might be Jupiter, with 88 moons, based on old information. That answer has two problems: it cites no source, and it is out of date. These are also two common challenges with LLMs. Checking a reputable source like NASA changes the answer: Saturn has the most moons, with 146 at the time of writing, and the count keeps changing as scientists discover more. Grounding answers in current sources makes them more trustworthy and less likely to be made up. Retrieval-augmented generation is a way to give large language models that grounding.

Why Retrieval-Augmented Generation?

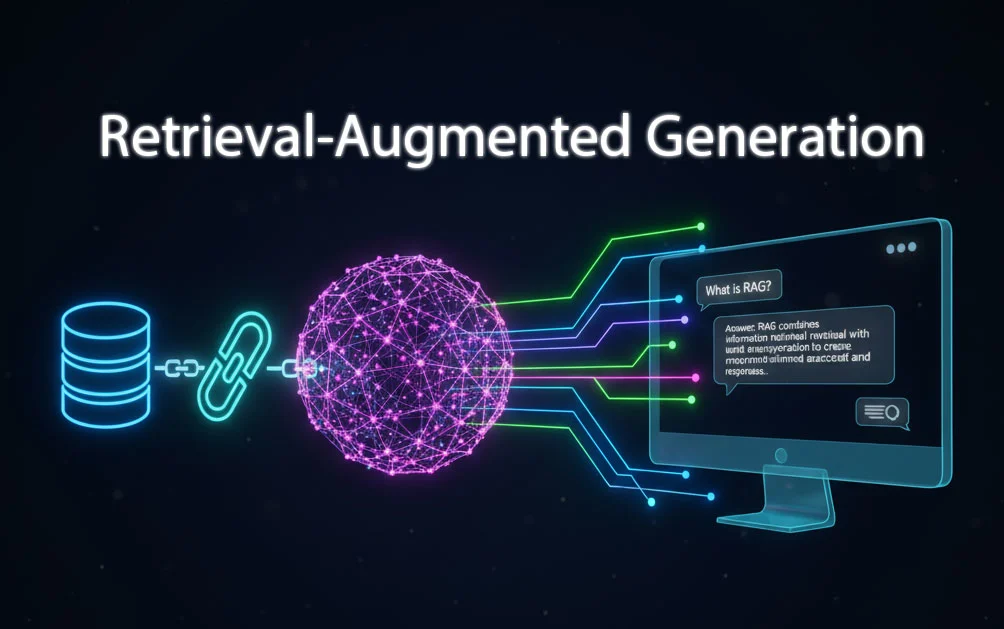

So, what happens when a large language model gets this question? The model, trained on past data, might confidently say Jupiter. The answer is wrong, but the model has no way of knowing that. That’s where retrieval-augmented generation, or RAG, steps in. With RAG, the model doesn’t rely only on what it learned during training. It first consults a content store, which could be open, like the internet, or closed, like a private document collection. In effect, the LLM asks, “Can you retrieve information that’s relevant to this question?” With the retrieved content in hand, the model can now say Saturn, not Jupiter.

How RAG Works

Here’s how it works. The user asks a question. Instead of answering right away, the generative model is instructed to first retrieve relevant content. That content is combined with the question, and only then does the model generate a response. The prompt now has three parts: an instruction, the retrieved content, and the question. Because the answer is based on the retrieved content, it can point to evidence for why it’s correct.

RAG helps with the two big LLM challenges. The first is staying up to date. There’s no need to retrain the model every time new information comes out; updating the data store is enough, and the next time someone asks, the latest information gets retrieved. The second is sourcing. The model is instructed to pay attention to primary sources before answering, which makes it less likely to hallucinate or leak data. It can even say, “I don’t know,” if there’s no reliable answer in the data store. That’s a big improvement over making up something that sounds right but isn’t.

There’s a catch, though. If the retriever doesn’t pull up the best information, the model might fail to answer a question that was actually answerable. That’s why a lot of work goes into improving both the retriever and the generative model, so the final answer is the best it can be.
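The three-part prompt described above can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the `Passage` class, the `build_prompt` function, and the instruction wording are all hypothetical names chosen for this example, and the single passage is hard-coded where a real system would call a retriever.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    """One retrieved piece of content plus where it came from."""
    source: str
    text: str


# Hypothetical instruction text; real systems tune this wording carefully.
INSTRUCTION = (
    "Answer the question using only the retrieved content below. "
    "Cite the source you used. If the content does not contain the answer, "
    "reply exactly: I don't know."
)


def build_prompt(question: str, passages: list[Passage]) -> str:
    """Assemble the three parts: instruction, retrieved content, question."""
    if passages:
        content = "\n".join(f"[{p.source}] {p.text}" for p in passages)
    else:
        content = "(nothing retrieved)"
    return (
        f"Instruction: {INSTRUCTION}\n\n"
        f"Retrieved content:\n{content}\n\n"
        f"Question: {question}"
    )


question = "In our solar system, what planet has the most moons?"
# Illustrative passage standing in for what a retriever would return.
passages = [Passage("NASA", "Saturn has 146 known moons.")]
prompt = build_prompt(question, passages)
print(prompt)
```

Keeping the data store separate from the prompt template is what makes updates cheap in this sketch: swapping the passage for a newer one changes the answer the model can give without touching the model itself.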

What Makes RAG Special?

LLMs like ChatGPT will attempt to answer almost any question, but when it comes to giving authoritative answers with sources, things get tricky. These models answer based on their training data, which isn’t always up to date. On their own, they don’t pull answers from real-time sources. Retrieval-augmented generation, or RAG, addresses this gap. RAG is a technique that brings in facts from external sources, making generative AI models more accurate and reliable. It is most useful when the answer needs to be current or specific to a particular body of knowledge.

The Story Behind RAG

RAG was developed by a team led by Patrick Lewis, who coined the name. The goal was to link generative AI services to external resources, especially those full of the latest technical details. In 2020, Lewis and his colleagues wrote a paper calling RAG a general-purpose fine-tuning recipe, because almost any LLM can use it to connect to practically any external resource. RAG gives AI models the ability to cite sources, like footnotes in a research paper. Users can check any claim themselves, which builds trust. A good example is Perplexity AI, an answer engine that uses RAG and LLMs together. Users ask a question, and the answer comes back with sources, so the information can be cross-checked.

How RAG Actually Works in Practice

Because the model is handed a source along with the question, the chances of it making a wild guess drop a lot. The model gets proper context and hallucinates less. Another big advantage is that RAG is relatively easy to implement. Developers can set up a basic version in just a few lines of code. That makes it faster and less expensive than fine-tuning, and users can hot-swap new sources on the go, meaning models or sources can be changed without shutting down the system.

How does RAG work step by step? First, it grabs data from a database, document, website, or API. Then it splits that data into small chunks and converts them into vector representations, or embeddings. These embeddings are stored in a specialized vector database. When a user asks a question, that query is also turned into an embedding. The system compares the query embedding with the stored embeddings and retrieves the most relevant chunks. These are merged with the original query to create an augmented context, which is fed to an LLM like GPT-4 or Claude 3. The generated response is the final output: a relevant, information-rich answer. The retrieval step can also be used on its own, as a search over the stored chunks, but pairing it with an LLM makes responses more fluent and context-rich, because the model gets up-to-date information from external sources.
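The chunk, embed, store, retrieve, and augment steps described above can be sketched with only the Python standard library. Everything here is a simplifying assumption made for illustration: `embed` is a toy word-count vector standing in for a real embedding model, a plain Python list stands in for the vector database, and the function names (`chunk`, `embed`, `cosine`, `build_index`, `retrieve`, `augment`) are hypothetical. The final call to an LLM is left out.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 12) -> list[str]:
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(documents: list[str]) -> list[tuple[str, Counter]]:
    """Chunk every document and store each chunk with its embedding."""
    return [(c, embed(c)) for doc in documents for c in chunk(doc)]


def retrieve(query: str, index: list[tuple[str, Counter]], k: int = 2) -> list[str]:
    """Return the k chunks whose embeddings are most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def augment(query: str, chunks: list[str]) -> str:
    """Merge the retrieved chunks with the original query."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Context:\n{context}\n\nQuestion: {query}"


# Illustrative documents; a real system would load these from its sources.
documents = [
    "Saturn has 146 known moons, the most of any planet in the solar system.",
    "Vector databases store embeddings so that similar chunks can be found quickly.",
]
index = build_index(documents)
query = "Which planet has the most moons?"
top = retrieve(query, index, k=1)
augmented = augment(query, top)
print(augmented)  # this string is what would be sent to the LLM
```

Word counts only match on shared vocabulary, so this sketch would miss paraphrases that a learned embedding model would catch. That gap is one reason the quality of the retriever matters as much as the quality of the generator.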

Where RAG Opens Up New Possibilities

With RAG, it’s possible to interact with practically any data collection, which opens up many new applications. For example, a generative AI model linked to a medical index could be a helpful assistant for doctors or nurses. In the same way, a financial analyst could work with an assistant connected to market data. Any business can turn technical manuals, policy documents, videos, or logs into knowledge bases. Retrieval-augmented generation makes large language models more accurate and reliable, and it makes their answers easier to verify. It helps models avoid wild guesses and keeps answers grounded in real sources. That’s why it packs quite a punch for anyone looking to get the most out of AI.