The Other Side of Artificial Intelligence Governance

Artificial Intelligence feels like magic sometimes. Ask it the toughest question and it returns an answer in seconds. That speed and intelligence have changed the way we live, work, and even think. But like every coin, AI has two sides: one shining with opportunities, the other carrying risks that can’t be ignored. To deal with those risks, it’s not enough to admire AI’s abilities. It needs proper governance: rules, frameworks, and boundaries that keep it from turning into a danger for humanity.

This is where the talk about governing AI becomes critical. Let’s dive into it step by step and see why governance of artificial intelligence is being treated as one of the most urgent topics of our time.

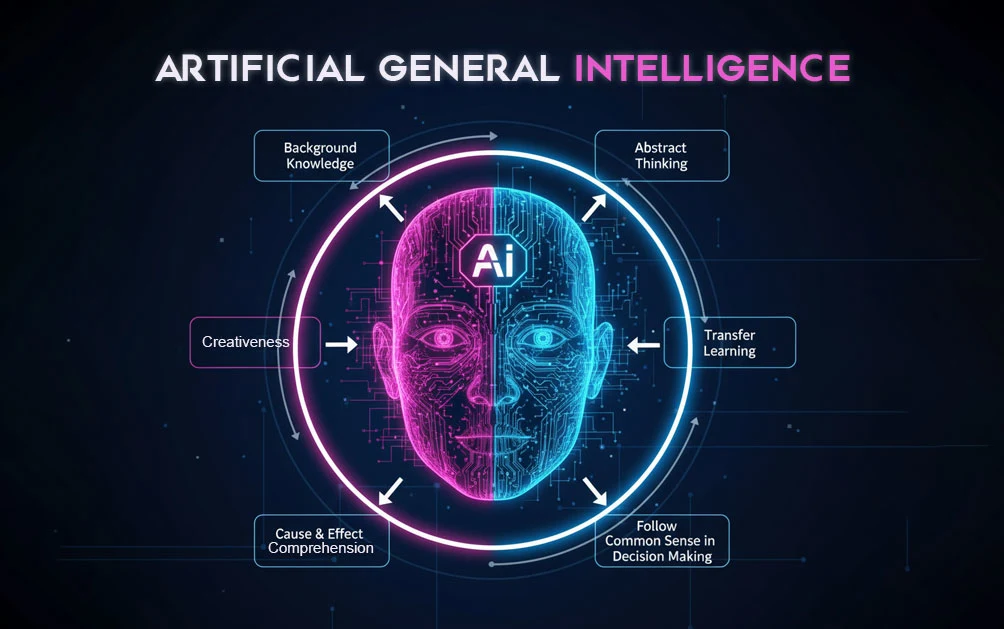

What Artificial Intelligence really means

At its core, AI is the attempt to give machines and algorithms human-like intelligence so they can solve problems faster than we ever imagined. It can work through complex calculations, assist in decision-making, and execute tasks quicker than a team of ten people. On the surface, it feels like a blessing. But the same power, if left unchecked, has the potential to disrupt life at every level, from the individual to the international.

Some experts even fear a future where AI evolves beyond human capacity, flipping the script so drastically that instead of humans controlling AI, AI starts controlling humans. That sounds like science fiction, but it’s a fear many researchers take seriously.

How AI impacts society and personal life

The most visible risks often start at the social level. Fake news is probably the simplest example. Entire communities can be pushed into conflict because of cleverly generated false headlines. Then there are deepfakes—videos showing leaders or celebrities doing things they never did. Fake but convincing enough to shake trust and cause chaos.

On a personal level, AI can make data theft and hacking much easier. Devices store everything, from photos to bank details to private messages, and AI-powered tools give attackers new ways to break into them. Once exposed, this data is often used for blackmail or fraud. This strikes directly at a person’s privacy, something that’s supposed to be a basic right.

Countries like India, where democracy thrives on public opinion, are especially vulnerable. Manipulated content can shift debates, change votes, and stir public emotion with frightening ease. Politics is no stranger to this kind of misuse.

Why AI is seen as a new-age security threat

Traditionally, national security concerns revolved around insurgencies or regional conflicts. But now wars don’t just happen through guns and bombs. They unfold through cyberattacks. Entire infrastructure systems—power grids, financial networks, defense databases—are vulnerable.

A country can now be brought to its knees without a single missile being fired, which makes AI-powered cyber intrusions as threatening as physical invasions. Internal and external security are equally exposed to these digital dangers.

The economic challenge of AI

There’s a big debate here. Some say AI will create new forms of work; others insist it will swallow jobs instead. In a country like India, where unemployment and inflation are already pressing problems, the second argument carries weight.

Think of an AI system handling the work of ten employees all by itself. On one hand, it boosts efficiency; on the other, it risks multiplying unemployment. There is no settled answer yet, but it is clear that economies must prepare for turbulence as AI expands its role in offices, factories, and even classrooms.

Why governance matters

All these risks—from cybersecurity breaches to unemployment—point toward one big necessity: governance. No single country will be able to manage these threats completely by itself. AI’s effects are global, and so must be the response.

This is why platforms like the United Nations, the G20, and the Global Partnership on AI (GPAI) are stepping in. AI governance needs as much collective action as climate change does. Pollution doesn’t stop at borders, and neither does AI misuse.

The role of India in AI governance

India has taken visible steps both internationally and nationally. At the 2023 G20 Summit, AI threats and cybersecurity were discussed openly, with India pushing for collaborative global solutions. Beyond global talks, India runs programs like “Responsible AI for Youth,” which teach younger generations both the power and the pitfalls of AI.

Since India has one of the youngest populations of any major country, with most citizens under 35, raising awareness among youth is crucial. They are the most exposed to AI tools and also the most vulnerable to their risks.

At the legal level, frameworks like the Information Technology Act, 2000, and the rules issued under it, which were amended in 2022, already cover some aspects. But experts highlight the need for a fresh National Cybersecurity Policy that directly addresses AI-related risks. The goal would be to make every citizen aware, even at the grassroots, of how AI can affect them and how they can safeguard themselves.

Building transparency and trust in AI

Transparency remains a key issue. When governance moved online years ago, digital systems were praised for limiting corruption through openness. The same thinking applies to AI technologies. Without transparency, exploitation remains possible. With it, misuse can be curbed.

That’s also where public-private partnerships can play a role. Governments can make laws, but companies creating AI systems must put those laws into practice. Together, both sides can build a safer line of defense.

What lies ahead

The path is complicated, but not impossible. Strong AI governance rests on three pillars: awareness, regulation, and global cooperation. Awareness to teach society how AI should and shouldn’t be used. Regulation to protect rights like privacy and security. And global cooperation so that no country is left fighting a worldwide challenge alone.

The dangers of AI are very real: fake news, job loss, hacking, propaganda, and cyberwars. Yet its promise is equally strong. That balance is tricky. AI doesn’t need to be feared. It needs to be carefully governed, because if the coin flips to its darker side without preparation, humanity may find itself on the losing end of the bargain.
