Every once in a while, a technology arrives that feels genuinely refreshing. Google DeepMind’s SIMA 2 is one of those developments. Instead of being just another digital assistant or a program that follows preset instructions, this system steps directly into virtual 3D environments and behaves much as a human player would: it explores, experiments, and learns.

For anyone who has imagined having a smart in-game companion—one that listens, understands your intentions, and gets better the more it plays—SIMA 2 represents an exciting way forward.

So, what exactly is SIMA 2?

SIMA stands for Scalable Instructable Multiworld Agent. Its purpose is simple to explain but challenging to achieve: build an AI that can understand what you ask and work across many different virtual worlds without needing to be redesigned for each one.

The first version, introduced in March 2024, provided a glimpse of what was possible. It could follow basic instructions, but its abilities were clearly limited.

SIMA 2 is a much more capable successor.

This updated agent can:

- understand broader and more natural instructions,

- figure out what steps are needed to complete a task,

- learn directly from its experiences inside the virtual world,

- and adapt even when placed in an environment it has never encountered before.

Behind the scenes, it uses Google’s Gemini models, but SIMA 2 stands out because it applies that intelligence inside interactive 3D settings, not just through text.

How SIMA 2 works

DeepMind trained the agent on demonstrations of human players and then let it practice different tasks on its own. Over time, it built a sense of how objects, movement, and goals fit together in a virtual world.

Here are the parts that make it impressive:

1. It understands natural, everyday instructions

You can talk to SIMA 2 the way you normally speak. Instructions like:

“Look around the area and see what we can use,”

“Build a small shelter before nightfall,”

“Try finding a path past those trees,”

are enough for it to understand the goal and come up with its own plan.

2. It reasons based on what it sees

SIMA 2 doesn’t rely on shortcuts such as access to a game’s internal data. It interprets the game visually (terrain, structures, objects, movement), just as a human player would, which lets it handle unfamiliar situations more naturally.

3. It improves through practice

Once it learns the basic mechanics, it keeps updating its skills on its own. When it passes or fails at something, it receives feedback that helps it understand what to change. Over time, its decision-making becomes better and more reliable.
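
To make the idea of learning from pass/fail feedback concrete, here is a toy sketch in Python. It is not how SIMA 2 is trained; it swaps in a simple epsilon-greedy bandit, and every name in it (the strategies, their success rates, the `practice` function) is a hypothetical stand-in chosen for illustration. The point is only to show how an agent's choices can improve when the sole signal it gets is whether an attempt succeeded.

```python
import random

# Hypothetical toy setup: none of these names or numbers come from DeepMind.
# Each "strategy" has a hidden success probability the agent must discover.
TRUE_SUCCESS_RATE = {"rush": 0.2, "gather_first": 0.8, "wander": 0.05}


def attempt(strategy: str, rng: random.Random) -> bool:
    """Simulate one attempt and return pass/fail feedback."""
    return rng.random() < TRUE_SUCCESS_RATE[strategy]


def practice(episodes: int, epsilon: float = 0.1, seed: int = 0) -> dict[str, float]:
    """Estimate each strategy's success rate from pass/fail feedback alone."""
    rng = random.Random(seed)
    tries = {s: 0 for s in TRUE_SUCCESS_RATE}
    estimate = {s: 0.0 for s in TRUE_SUCCESS_RATE}
    for _ in range(episodes):
        # Mostly exploit the best-known strategy, occasionally explore another.
        if rng.random() < epsilon:
            strategy = rng.choice(list(TRUE_SUCCESS_RATE))
        else:
            strategy = max(estimate, key=estimate.get)
        passed = attempt(strategy, rng)
        tries[strategy] += 1
        # Incremental mean: nudge the estimate toward the observed outcome.
        estimate[strategy] += (float(passed) - estimate[strategy]) / tries[strategy]
    return estimate


if __name__ == "__main__":
    learned = practice(episodes=2000)
    for name, rate in learned.items():
        print(f"{name}: estimated success rate {rate:.2f}")
```

With enough practice episodes, the estimates drift toward the hidden success rates and the agent spends most of its attempts on the strategy that works best. A real agent faces a far larger space of actions and much richer feedback, but the loop of trying, scoring, and adjusting has the same shape.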

4. It handles new worlds surprisingly well

DeepMind tested SIMA 2 in places it had never been trained in, including entirely AI-generated environments. Even without prior exposure, it managed to understand the layout, identify useful objects, and complete tasks — showing real adaptability.

Where SIMA 2 has been tested

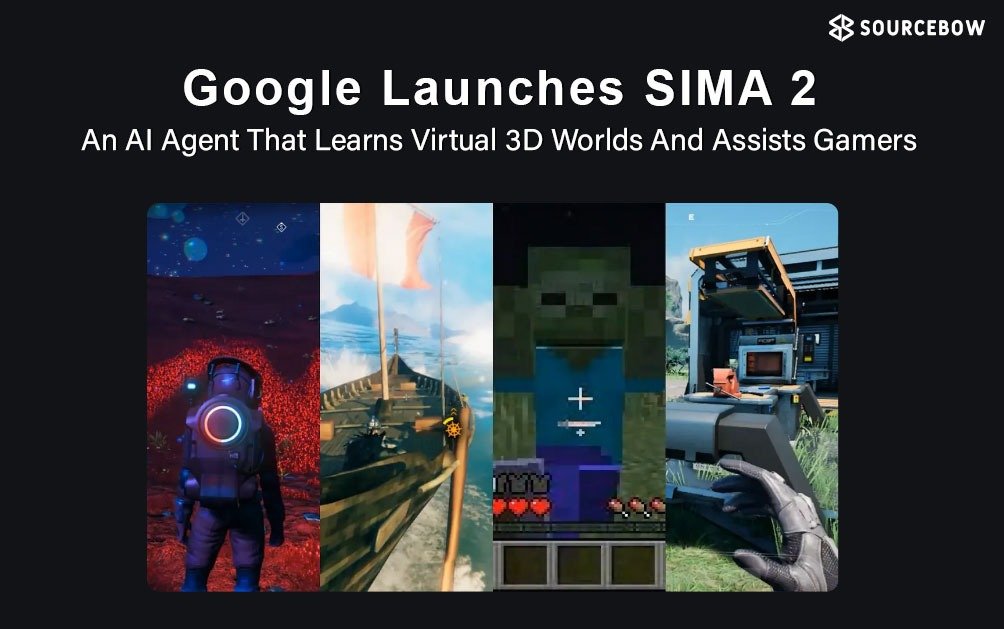

To check how well the agent performs, DeepMind used several kinds of virtual spaces, such as:

- MineDojo, a research framework built on Minecraft,

- ASKA, a survival and crafting environment,

- and new worlds created by Genie 3, which can generate unique 3D scenes from prompts.

The key takeaway is that it didn’t need specialized training for each game. It worked out the dynamics on its own, which hints at a broader understanding of how 3D environments function.

Why SIMA 2 matters for the future

While gamers will certainly benefit from smarter AI companions, the importance of this goes beyond entertainment.

1. It suggests progress toward more general AI

SIMA 2 shows early qualities associated with broader intelligence: planning, adapting, and learning from experience. These abilities point toward more versatile AI systems in the future.

2. It lays groundwork for robotics

Virtual learning provides a safe space for AI to develop skills before being used in real-world machines. An agent that can navigate complex digital environments may eventually help robots handle physical tasks with better understanding.

3. It opens doors to better virtual assistants

In the coming years, we might see AI partners that behave more like actual teammates, tools for game developers that test new worlds automatically, or educational environments where AI interacts with learners inside 3D simulations.

4. It supports safer AI development

Training AI in digital spaces avoids the risks that come with real-world experimentation. Systems like SIMA 2 can learn quickly without causing unintended harm, making the research process more responsible.

What SIMA 2 still struggles with

Despite its strengths, SIMA 2 is still an early version.

Tasks with many stages can occasionally confuse it, and very busy environments make planning harder. It still benefits from clear feedback while learning. DeepMind is also limiting access to the agent to ensure careful, safe development.

These limitations are normal at this stage, and the progress so far already represents a meaningful step forward.

Conclusion

SIMA 2 isn’t just another upgrade from Google DeepMind; it’s a real step toward making AI more useful, practical, and accessible for everyday players and developers. By learning directly from complex 3D environments and understanding a wide range of game commands, it bridges the gap between traditional game assistants and a truly interactive digital companion.

It learns by exploring, trying, and adjusting — much like a person learning a new game. For players, that could mean fewer frustrating moments and more natural help. For creators, it offers a new way to test, build, and refine virtual worlds. There’s still work to be done, but it gives a clear sense that interactive AI is moving from the experimental to the genuinely useful.