Can AI Understand More Than Text?

So, here’s the deal. AI isn’t just about typing in a question and getting a wall of text back. Things have gotten way more interesting. Now, AI can actually see images, hear audio, and even make music or art.

Imagine drawing a quick sketch of a signup form, snapping a pic, and then asking AI to build the whole website for you: HTML, CSS, JavaScript, all of it. Wild, right? And it can actually work. The AI even picks up on little details. If you wrote “Instagram” somewhere on your sketch, it’ll include that too.

But it doesn’t stop there. AI can generate music just from a description. Want a rhythmic East Coast boom bap hip hop beat that’s uplifting and inspirational? Just say it, and boom, there’s your track.

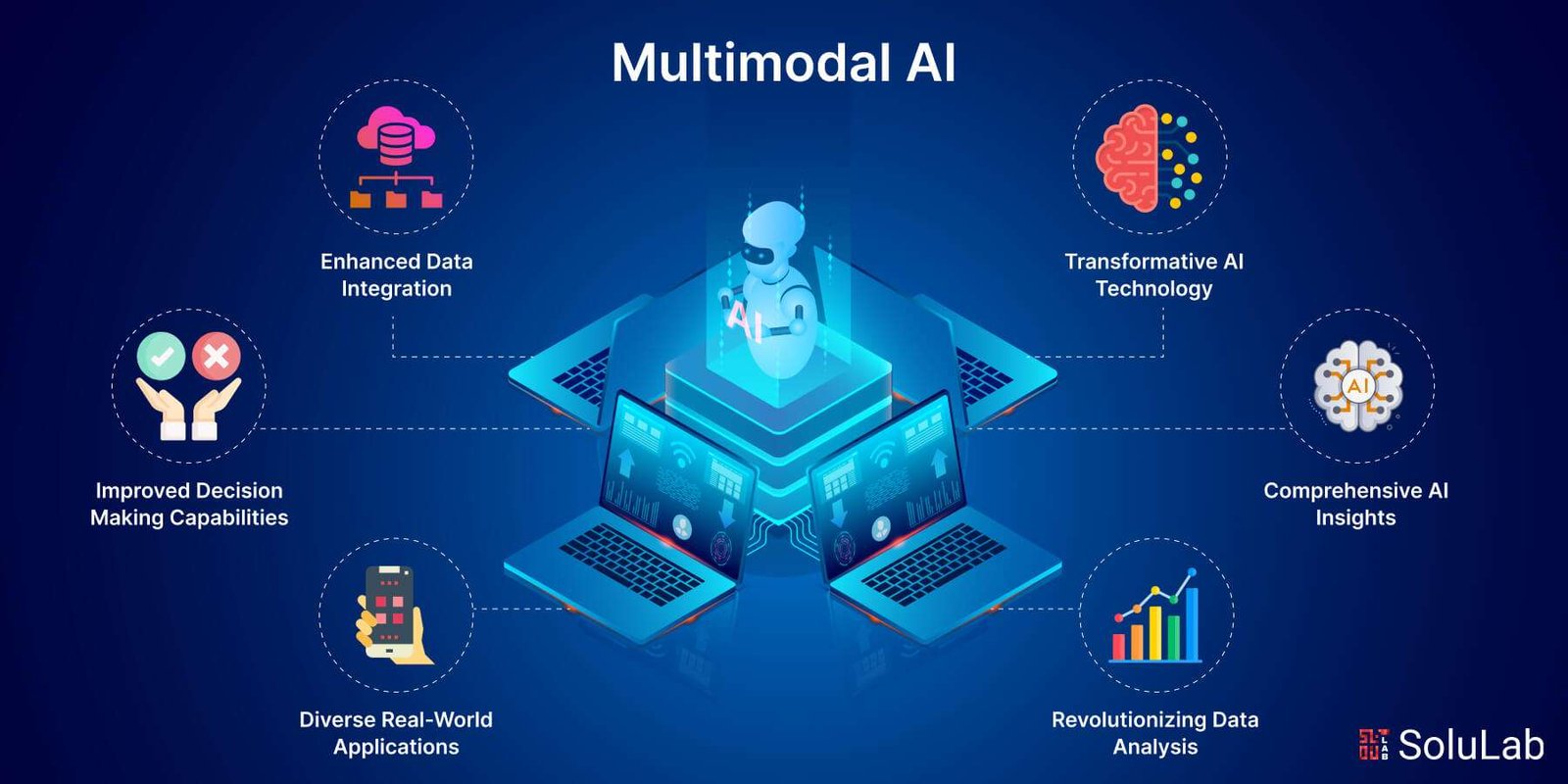

What’s Multimodal AI Anyway?

Here’s where things get really interesting. The ability for AI to handle different types of data (text, images, audio, code, even video) is called multimodality. It’s a big word, but it just means the AI can juggle all this stuff at once.

Think about how people learn. Kids listen, look around, touch stuff. AI is starting to do the same thing.

How Does This All Work?

Let’s break it down. Many image-generating AIs use something called diffusion models. These models start with a mess of random noise and clean it up step by step until it turns into a picture.

The catch? On their own, they just make random images. But if you add a text prompt, you can steer the AI toward what you want. Like, you type “a dog on a skateboard,” and the AI figures out how to make that happen.
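Here’s a tiny toy version of that “start with noise, clean it up a bit at a time” loop. It is not a real diffusion model: the `TOY_TARGETS` lookup and `toy_denoise_step` are made-up stand-ins for a trained neural network, and the “image” is just four numbers. It only shows the shape of the idea, with the prompt deciding where the noise ends up.

```python
import random

# Hypothetical stand-in for a trained network: the "clean" picture each prompt
# steers toward. A real model works this out from learned weights.
TOY_TARGETS = {
    "a dog on a skateboard": [0.2, 0.8, 0.5, 0.9],
    "an apple": [0.9, 0.1, 0.1, 0.3],
}

def toy_denoise_step(pixels, prompt, strength=0.2):
    """Nudge every pixel a little closer to what the prompt describes."""
    target = TOY_TARGETS[prompt]
    return [p + strength * (t - p) for p, t in zip(pixels, target)]

def generate(prompt, steps=30, seed=0):
    """Start from pure noise and refine it one small step at a time."""
    rng = random.Random(seed)
    pixels = [rng.gauss(0.0, 1.0) for _ in range(4)]
    for _ in range(steps):
        pixels = toy_denoise_step(pixels, prompt)
    return pixels

dog_image = generate("a dog on a skateboard")
apple_image = generate("an apple")
```

Both calls start from the same noise (same seed), but the prompt pulls them to different results.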

How Does AI Know What Things Mean?

This is where embedding models come in. They turn both words and pictures into lists of numbers called vectors that capture their meaning. So the word “woman” and a photo of a woman both get turned into vectors that land close together, which tells the AI they mean roughly the same thing.

It’s like giving everything a secret code, and the AI learns to match them up.
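To make the “secret code” idea concrete, here’s a small sketch that compares vectors with cosine similarity, a common way to measure whether two vectors point the same way. The three-number vectors are invented for illustration; real embedding models produce much longer ones.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up embeddings, not output from any real model.
text_woman = [0.9, 0.1, 0.2]    # the word "woman"
image_woman = [0.8, 0.2, 0.1]   # a photo of a woman
image_truck = [0.1, 0.9, 0.3]   # a photo of a truck

same_meaning = cosine_similarity(text_woman, image_woman)
different_meaning = cosine_similarity(text_woman, image_truck)
```

With these numbers, `same_meaning` comes out much higher than `different_meaning`, which is the kind of signal the AI uses to match a word to a picture.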

Training the AI to Match Things Up

To train these models, the AI looks at tons of image-caption pairs. For each pair, it turns the image and the caption into vectors and tries to make them point in the same direction.

If the image is an apple and the caption says “apple,” the AI makes those vectors line up. If the caption says “dog” but the image is an apple, it pushes those vectors apart. This is how the AI learns what matches and what doesn’t.
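Here’s a rough sketch of the score a model like this might try to push down during training. It’s one common flavor of contrastive loss, simplified to a single direction (each image has to pick its own caption out of the batch), and it only computes the loss; there’s no actual learning step. The vectors, the `temperature` value, and the function names are all assumptions for illustration.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    """Scale a vector to length 1 so only its direction matters."""
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]

def contrastive_loss(image_vecs, text_vecs, temperature=0.1):
    """Average cross-entropy where image i should pick caption i from the batch."""
    images = [normalize(v) for v in image_vecs]
    texts = [normalize(v) for v in text_vecs]
    total = 0.0
    for i, img in enumerate(images):
        # Similarity of this image to every caption, sharpened by the temperature.
        logits = [dot(img, txt) / temperature for txt in texts]
        log_sum_exp = math.log(sum(math.exp(l) for l in logits))
        # Small when the matching caption has the highest similarity.
        total += log_sum_exp - logits[i]
    return total / len(images)

# Toy vectors: index 0 is "apple", index 1 is "dog".
image_vecs = [[1.0, 0.1], [0.1, 1.0]]
matched_captions = [[0.9, 0.2], [0.2, 0.9]]   # captions line up with images
swapped_captions = [[0.2, 0.9], [0.9, 0.2]]   # "dog" caption on the apple image

loss_matched = contrastive_loss(image_vecs, matched_captions)
loss_swapped = contrastive_loss(image_vecs, swapped_captions)
```

The matched pairs give a loss near zero and the swapped pairs give a large one, so lowering this number pulls correct pairs together and pushes wrong ones apart.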

What About Models That Can Do Everything?

Text-to-image models are cool, but what about AIs that can handle any kind of input and spit out any kind of output? Like, you could show it a picture, ask it a question, and get back a voice answer. That’s where things get a little trickier.

Let’s say someone asks the AI to “paint a picture of a man who would commit such a crime.” Does that mean draw his face, or describe his backstory? Even people might not agree on the answer. The AI has to guess what’s wanted, and sometimes it gets it right, sometimes not.

How Do These Multimodal Systems Work Together?

If it looks like the AI is doing audio-to-image or video-to-text, what’s often really happening is a chain of smaller AIs working together. For example, if you ask it something by voice, first an audio-to-text model turns your words into text. Then a text AI figures out what you want. If you want an image, another model turns the idea into a picture. In a setup like this, text is the glue that holds it all together.
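The chain can be sketched in a few lines. Every function below is a hypothetical stand-in (no real speech, language, or image model is called), so treat it as a diagram in code form: each stage hands plain text to the next.

```python
def speech_to_text(audio_bytes):
    """Hypothetical stand-in for an audio-to-text model."""
    return audio_bytes.decode("utf-8")  # pretend the audio is already words

def understand_request(text):
    """Hypothetical stand-in for a text model that works out what the user wants."""
    wants_image = text.lower().startswith("draw")
    return {"task": "image" if wants_image else "answer", "prompt": text}

def text_to_image(prompt):
    """Hypothetical stand-in for a text-to-image model."""
    return f"<image of: {prompt}>"

def handle_voice_request(audio_bytes):
    text = speech_to_text(audio_bytes)        # audio -> text
    plan = understand_request(text)           # text -> intent
    if plan["task"] == "image":
        return text_to_image(plan["prompt"])  # text -> image
    return f"Answer to: {plan['prompt']}"

image_result = handle_voice_request(b"Draw a dog on a skateboard")
text_result = handle_voice_request(b"What is multimodal AI?")
```

Notice that the image model never sees the audio. It only sees the text that came out of the earlier steps.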

Real Life Examples That Are Actually Useful

Multimodal AI isn’t just a fancy trick. It’s already making life easier. For example, you can upload a video, an image, and some text, and the AI can find the exact moment in the video that matches your image.
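One plausible way to do that kind of video search, assuming every frame and the uploaded image have already been turned into vectors by an embedding model, is to pick the frame whose vector is most similar to the image’s. The timestamps and vectors here are invented for the example.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# (timestamp in seconds, made-up embedding of the frame at that time)
frames = [
    (0.0, [0.9, 0.1, 0.0]),
    (1.0, [0.5, 0.5, 0.1]),
    (2.0, [0.1, 0.9, 0.2]),
    (3.0, [0.0, 0.3, 0.9]),
]
query_image = [0.2, 0.8, 0.1]  # made-up embedding of the uploaded image

# Keep the frame whose embedding is closest in direction to the query image.
best_time, best_frame = max(
    frames, key=lambda frame: cosine_similarity(query_image, frame[1])
)
```

Here `best_time` ends up as the 2-second mark, since that frame’s vector points almost the same way as the query.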

Or, if you’re a developer, you can feed in a whole codebase and ask the AI to write a guide for new team members. Saves a ton of time.

Got a furniture site? Let users upload a photo of their living room, then show them art that matches their style. The AI can look at the room, look at the art options, and explain which ones fit best. It’s like having a super smart interior designer on call.

Why Does This Matter?

The ability for AI to work with all these different types of data is a game changer. It opens up new possibilities in e-commerce, security, entertainment, and way more.

It’s not perfect yet. Sometimes it’s not clear what kind of answer you’ll get. But the potential is huge.

So next time you see an AI that can chat, draw, listen, and create, just remember it’s not magic. It’s multimodal AI, and it’s only getting better.