Exploring Qwen3-VL Multimodal Embeddings and How They Change AI Search

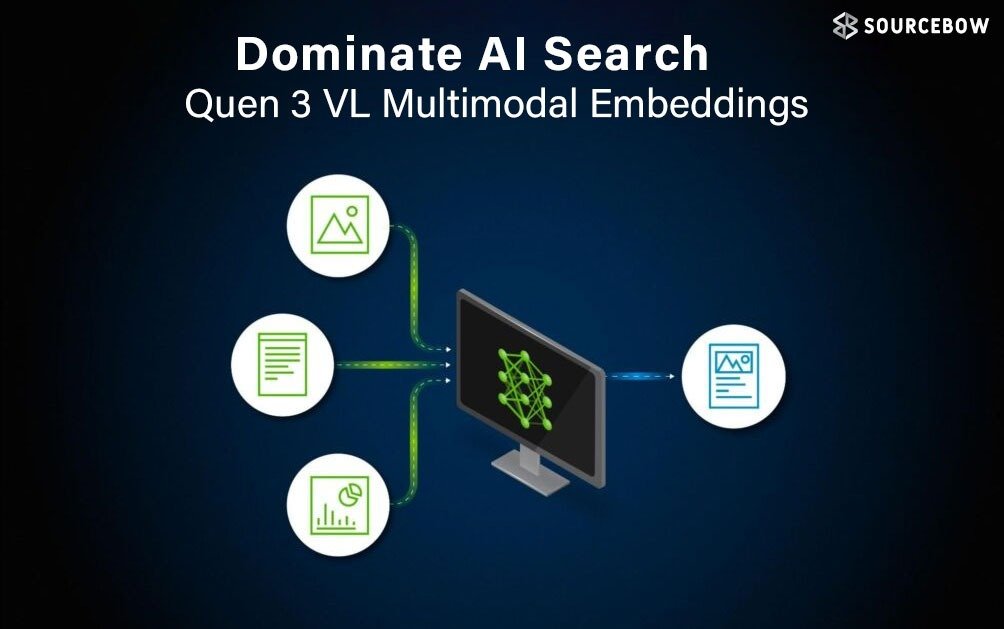

The world of AI embeddings just got a big upgrade with the new Qwen3-VL embedding models. These aren’t your regular text-only embeddings: they are multimodal. That means they can handle text, images, and even videos. Imagine a single model that can understand a paragraph, a photo, or a clip, and connect the meaning across all of them. That’s exactly what these new embeddings bring to the table.

Understanding Embeddings and Why They Matter

Before diving into multimodal embeddings, it’s worth revisiting what embeddings actually are. Think of them as numerical snapshots of meaning. Every piece of content—whether text or image—can be turned into a vector, a list of numbers, that captures its essence. Once content is in vector form, it’s much easier to compare and find similarities.

For instance, comparing two paragraphs directly is tricky. But compare their vectors, and suddenly it’s just math—cosine similarity or other distance measures can show how close two ideas really are. This is the core of search, recommendation, and retrieval systems.
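To make the "it’s just math" point concrete, here is a minimal sketch of cosine similarity in plain Python. The three short vectors are made-up stand-ins for real embeddings (which would have thousands of dimensions), and the names `cat_text`, `cat_photo`, and `car_text` are purely illustrative.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Made-up 4-dimensional vectors standing in for real embeddings.
cat_text = [0.9, 0.1, 0.0, 0.2]
cat_photo = [0.8, 0.2, 0.1, 0.3]
car_text = [0.0, 0.9, 0.8, 0.1]

# Vectors pointing in a similar direction score close to 1.
print(cosine_similarity(cat_text, cat_photo))
# Vectors pointing in different directions score much lower.
print(cosine_similarity(cat_text, car_text))
```

The score depends only on direction, not on vector length, which is one reason cosine similarity is a common default for comparing embeddings.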

Traditionally, embeddings worked mostly for text. Images had separate approaches, and the two never really spoke the same language. That’s where multimodal embeddings step in. Now, a model can understand text, images, or videos and map them all into the same “semantic space.” A photo of a cat, a video of a cat, and the sentence “a picture of a cat” can all be recognized as similar by the model.

Why Multimodal Embeddings Are Exciting

The potential here is massive. Take PDFs, for example. Standard retrieval systems usually just extract text, leaving charts, screenshots, and diagrams untouched. Multimodal embeddings can process all these elements, making searches more meaningful.

This isn’t a totally new idea. Early CLIP models from OpenAI showed how text and image embeddings could work together, and Google’s SigLIP further explored this space. But Qwen3-VL brings this into a flexible, practical format that can be used directly for retrieval, not just image generation.

How Embeddings and Re-rankers Work Together

Multimodal embeddings are great at recall—they can fetch a bunch of items that might match a query. But they aren’t perfect. To improve accuracy, these embeddings are paired with re-ranker models.

Think of embeddings as the “broad sweep” tool. They pull in the top candidates. Then the re-ranker goes through that smaller set and reorders it more carefully. As a rough rule of thumb, embeddings are fast but only around 85% precise, while re-rankers are slower but more accurate. Together, they balance speed and precision, giving better overall results.
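The two-stage idea can be sketched in a few lines. This is an illustration under stated assumptions, not the actual Qwen pipeline: the vectors are made up, and `toy_rerank_score` is a hypothetical word-overlap placeholder standing in for a real re-ranker model.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def toy_rerank_score(query_text, doc_text):
    """Placeholder for a real re-ranker: fraction of query words found in the document."""
    query_words = set(query_text.lower().split())
    doc_words = set(doc_text.lower().split())
    if not query_words:
        return 0.0
    return len(query_words & doc_words) / len(query_words)

def retrieve_then_rerank(query_vec, query_text, corpus, rerank_score, recall_k=3, final_k=2):
    # Stage 1: cheap vector similarity over the whole corpus (the broad sweep).
    candidates = sorted(
        corpus, key=lambda item: cosine(query_vec, item["vector"]), reverse=True
    )[:recall_k]
    # Stage 2: slower, more careful scoring over the small candidate set only.
    reranked = sorted(
        candidates, key=lambda item: rerank_score(query_text, item["text"]), reverse=True
    )
    return reranked[:final_k]

# Made-up corpus; in a real system the vectors would come from an embedding model.
corpus = [
    {"id": "a", "text": "quarterly revenue chart for 2023", "vector": [0.9, 0.1, 0.1]},
    {"id": "b", "text": "revenue forecast table", "vector": [0.8, 0.3, 0.0]},
    {"id": "c", "text": "photo of the office cat", "vector": [0.1, 0.9, 0.2]},
    {"id": "d", "text": "chart of quarterly revenue by region", "vector": [0.7, 0.2, 0.3]},
]
query_vec = [0.85, 0.2, 0.1]

results = retrieve_then_rerank(query_vec, "quarterly revenue chart", corpus, toy_rerank_score)
print([item["id"] for item in results])
```

In this toy data, vector search alone ranks "b" above "d", but the second stage moves "d" ahead because it matches the query wording better. The expensive scorer only ever sees `recall_k` items, which is what keeps the combination fast.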

What’s Inside Qwen3-VL

The Qwen3-VL embedding models are built on Qwen’s vision-language foundation models and come in two sizes: 2B and 8B parameters. Both are released under the Apache 2.0 license and are available on Hugging Face.

- Text and image processing: Can handle queries, documents, photos, diagrams, and screenshots.

- Video clips: Can treat a sequence of images (frames) as a short video snippet.

- Multimodal queries: Mix text and images in a single query.

- Multilingual support: Over 30 languages.

- Large context window: 32K tokens, ideal for bigger documents.

- Matryoshka embeddings: Truncated (partial) embeddings for faster searches with little loss of accuracy.

The 8B model produces 4,096-dimensional embeddings, while the 2B model produces half that (2,048 dimensions). With Matryoshka representation learning, searches can be sped up by using only the leading portion of each embedding.

Performance and Benchmarks of Qwen3-VL

Qwen3-VL performs strongly on the Massive Multimodal Embedding Benchmark (MMEB). The 8B model ranks first, while the 2B model isn’t far behind, outperforming many larger 7B models. These results show that you don’t always need the biggest model to get strong embeddings.

Practical Uses for Multimodal Embeddings

The applications are huge. Here are a few examples:

- Visual document search: Instead of relying on OCR text alone, images, charts, and screenshots can be embedded directly for richer searches.

- E-commerce search: Upload a product photo and find similar items across stores, even in different colors.

- Video analysis: Search through hours of footage using embedded frames to locate specific moments.

Experimenting with Qwen3-VL

In practice, the models are flexible. You can encode text, images, and even combine them for hybrid searches. Similarity scores are generated to rank results, though they aren’t percentages—they’re just relative indicators.

The Matryoshka embeddings also make searches faster. You can shrink the vector dimensions from 4,096 down to 1,024 or even 64 for cheaper comparisons. Even at lower dimensions, the model often still retrieves the right top candidates, only with less compute and storage.
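Shrinking a Matryoshka-style embedding usually means keeping the first N values and rescaling the result to unit length. The sketch below shows that step on a made-up 8-dimensional vector; `truncate_embedding` is an illustrative helper, not part of any Qwen library. Truncating like this only preserves meaning when the model was trained with Matryoshka representation learning, so that the leading dimensions carry most of the information.

```python
import math

def truncate_embedding(vector, dims):
    """Keep the first `dims` values and rescale the result to unit length."""
    if not 0 < dims <= len(vector):
        raise ValueError("dims must be between 1 and len(vector)")
    head = vector[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        raise ValueError("cannot normalize an all-zero prefix")
    return [x / norm for x in head]

# Made-up 8-dimensional vector standing in for a 4,096-dimensional embedding.
full = [0.6, 0.3, 0.2, 0.1, 0.05, 0.05, 0.02, 0.01]

short = truncate_embedding(full, 2)
print(len(full), "->", len(short))
print(short)
```

Query and document vectors have to be truncated to the same size before they are compared. Going from 4,096 to 1,024 dimensions cuts storage and per-comparison work to a quarter.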

Building Retrieval Systems

A simple retrieval system can combine text and image embeddings for search. Add items with metadata, set up the index, and run queries. The system can return mixed results—text and images together—based on semantic similarity. This approach is ideal for visual question answering or searching content that’s partially textual and partially visual.
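As a rough sketch of those steps (add items with metadata, build the index, run a query), here is a tiny in-memory index. Everything in it is an assumption for illustration: `TinyIndex` is a hypothetical class, the vectors are made up rather than produced by a model, and a real system would use a vector database or a nearest-neighbor library instead of a linear scan.

```python
import math
from dataclasses import dataclass, field

def _normalize(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]

@dataclass
class Item:
    item_id: str
    vector: list
    metadata: dict = field(default_factory=dict)

class TinyIndex:
    """Brute-force index: stores unit vectors and scans all of them per query."""

    def __init__(self):
        self._items = []

    def add(self, item_id, vector, metadata=None):
        self._items.append(Item(item_id, _normalize(vector), metadata or {}))

    def search(self, query_vector, top_k=3):
        q = _normalize(query_vector)
        # On unit vectors the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, item.vector)), item) for item in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]

index = TinyIndex()
# Text and image items share one vector space, so they live in one index.
index.add("doc-1", [0.9, 0.1, 0.1], {"type": "text", "source": "report.pdf"})
index.add("img-1", [0.8, 0.2, 0.2], {"type": "image", "source": "chart.png"})
index.add("img-2", [0.1, 0.9, 0.1], {"type": "image", "source": "cat.jpg"})

hits = index.search([1.0, 0.1, 0.1], top_k=2)
for score, item in hits:
    print(item.item_id, item.metadata["type"], round(score, 3))
```

The metadata travels with each hit, so the caller can tell whether a result is a passage of text or an image and render it accordingly.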

For local deployment, quantized versions of the models exist. They’re smaller and can run on personal computers, making it possible to build custom retrieval systems without relying on cloud infrastructure.

Wrapping It Up

Qwen3-VL multimodal embeddings and the re-ranker models bring a lot of power to AI search systems. They allow semantic understanding across text, images, and videos, making retrieval more accurate and flexible. Combined with Matryoshka embeddings, they also keep things fast without sacrificing much precision.

From searching mixed-content documents to analyzing videos or enhancing e-commerce platforms, these models open a lot of possibilities. They make the idea of “understanding” content more human-like and much more practical.

The future of search is multimodal, and Qwen3-VL shows just how far we’ve come. If you’re working with content that’s more than just text, these models are definitely worth exploring.