AI Explained Without the Noise

The Simple Guide Everyone Needs Right Now

Let’s face it, artificial intelligence is everywhere lately. It comes up in Google searches, is embedded in work applications, drafts emails, edits photos, and seems to know what show we want to watch next. The speed is mind-boggling. It is almost as if the world suddenly began speaking a different language, and not everybody received the memo.

But here’s the thing. This language is not intended to be secret.

Once you grasp the simple concepts, AI becomes less frightening and less technical. It just becomes a tool. It’s a very powerful tool, yes, but it’s just that: a tool that can be used, questioned, and understood. And that’s important, because this technology isn’t a fad.

A report published by the McKinsey Global Institute estimates that generative AI may contribute as much as 4.4 trillion dollars to the global economy annually. This is not pocket change. With amounts this large at stake, the technology is not going to quietly disappear on us. Understanding it is very soon going to shift from a nice-to-have to a necessity.

So, let’s unpack this. No jargon dump. No tech lectures. Just the essential ideas that actually help make sense of what’s happening.

AI explained by starting with the big idea

The term AI gets thrown around all the time, so it helps to slow down and look at what it really means.

Artificial intelligence is the broad umbrella. Under it falls every attempt to make machines do things that would normally require human thought, such as understanding speech, spotting faces in photos, translating languages, or answering questions in a natural-sounding way. If you see a computer do something that felt distinctly human until recently, it probably fits.

Think of AI as the goal. The end destination. Making machines smart enough to handle tasks that were once only possible with a human brain.

But that raises a question. How does a machine actually get smart?

AI explained through how machines learn

This is where machine learning enters the picture.

Instead of programmers writing rules for every possible situation, which would be impossible, machines are given huge amounts of data and allowed to find patterns on their own. That learning happens quietly in the background.

This is how streaming platforms suggest shows that feel oddly accurate. It is how spam filters catch junk emails. It is how map apps predict traffic before the jam even starts. No magic. Just machine learning at work.

The system looks at past data, finds what repeats, and uses that knowledge to make predictions. Sometimes those predictions are great. Sometimes they miss. But with more data and feedback, they tend to improve.
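To make that concrete, here is a minimal sketch of a spam filter that learns from examples instead of hand-written rules. The six messages, the labels, and the function names `train` and `predict` are all made up for illustration, and real filters use far more data and smarter statistics. The point is only that nothing in the code says "the word free means spam". The counts come from the data.

```python
from collections import Counter

# Hypothetical, hand-made training data: (message, label) pairs.
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("limited offer win cash now", "spam"),
    ("meeting moved to friday", "normal"),
    ("can you review my report", "normal"),
    ("lunch on friday with the team", "normal"),
]


def train(examples):
    """Count how often each word shows up under each label."""
    counts = {"spam": Counter(), "normal": Counter()}
    for text, label in examples:
        counts[label].update(text.split())
    return counts


def predict(counts, text):
    """Pick the label whose training messages used these words more often."""
    words = text.split()
    spam_score = sum(counts["spam"][word] for word in words)
    normal_score = sum(counts["normal"][word] for word in words)
    # Ties (including messages made only of unseen words) fall back to "normal".
    return "spam" if spam_score > normal_score else "normal"


model = train(TRAINING_DATA)
print(predict(model, "claim your free prize"))
print(predict(model, "review the report on friday"))
```

Swap in different training messages and the predictions change, with no rule edited by hand.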

AI explained by the brain-inspired engine behind it

Much of this learning is powered by something called neural networks. And the idea is surprisingly simple.

Neural networks are inspired by the human brain. Not copied exactly, but loosely modeled. They are made of digital neurons that connect, fire, and adjust based on results. Over time, these connections get better at spotting patterns in text, images, audio, and more.
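For the curious, here is a rough sketch of a single digital neuron doing the "connect, fire, and adjust" routine. It uses the classic perceptron update rule, which is one simple way to train a neuron and not how large modern networks are trained. The task (learning the logical OR of two inputs), the names `fire` and `train_neuron`, and the learning rate are assumptions chosen to keep the example tiny.

```python
# Hypothetical training examples: two inputs and the answer we want (logical OR).
OR_EXAMPLES = [
    ((0, 0), 0),
    ((0, 1), 1),
    ((1, 0), 1),
    ((1, 1), 1),
]


def fire(weights, bias, inputs):
    """The neuron fires (returns 1) when its weighted input is above zero."""
    total = weights[0] * inputs[0] + weights[1] * inputs[1] + bias
    return 1 if total > 0 else 0


def train_neuron(examples, epochs=20, learning_rate=0.1):
    """Nudge the connection strengths a little after every wrong answer."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - fire(weights, bias, inputs)
            # When the answer was right, error is 0 and nothing changes.
            weights[0] += learning_rate * error * inputs[0]
            weights[1] += learning_rate * error * inputs[1]
            bias += learning_rate * error
    return weights, bias


weights, bias = train_neuron(OR_EXAMPLES)
for inputs, target in OR_EXAMPLES:
    print(inputs, "->", fire(weights, bias, inputs), "(wanted", target, ")")
```

A real network stacks huge numbers of these units in layers, but the basic loop is the same: guess, compare with the right answer, adjust.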

This is the engine that makes modern AI feel impressive. Without neural networks, today’s tools would feel clumsy and limited.

Here’s a simple way to see the structure:

- AI is the big field, and the goal.

- Machine learning is one way to reach that goal.

- Neural networks are the engine behind much of that learning.

Like nested boxes, one sitting inside the other.

AI explained through large language models

Now zoom in on the technology behind popular chatbots.

Large language models, often called LLMs, are built on neural networks and trained on massive amounts of text. Books, articles, websites, code, conversations. The scale is hard to imagine.

That’s why they can write emails, explain ideas, or answer questions in a way that feels natural. They are not thinking like humans, but they are very good at predicting what word should come next based on everything they have seen.
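Here is a toy version of "predict the next word", assuming a model that only counts which word followed which in its training text. Real LLMs use neural networks and look at far more context than a single word, so treat this as a cartoon of the idea. The three training sentences and the names `train` and `predict_next` are invented for the example.

```python
from collections import Counter, defaultdict

# Hypothetical training text. A real model would see billions of words.
TRAINING_TEXT = (
    "the cat sat on the mat . "
    "the cat chased the mouse . "
    "the cat sat on the sofa ."
)


def train(text):
    """For every word, count which words came right after it."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the word seen most often after `word`, or None if never seen."""
    candidates = follows.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


model = train(TRAINING_TEXT)
print(predict_next(model, "the"))
print(predict_next(model, "cat"))
print(predict_next(model, "dog"))
```

The model has no idea what a cat is. It only knows that "cat" came after "the" more often than anything else did, and that it never saw the word "dog" at all.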

This training data is like the AI’s diet. Whatever it consumes shapes how it behaves.

And that brings up an uncomfortable truth.

AI explained when bias sneaks in

AI learns from human data. And human data is messy.

Bias, stereotypes, errors, and blind spots all exist in the information used to train these systems. When that happens, AI can learn those same patterns and even amplify them.

This is one of the most critical challenges in AI today. If flawed data goes in, flawed behavior can come out. Researchers are working hard on this problem, but it is not an easy fix. It reminds us that AI is not neutral by default. It reflects the world that trained it.
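A small sketch shows how a lean in the data can turn into something stronger in the output. The training pairs below are invented and deliberately skewed, and the "model" is just a counter that always picks the most common answer it saw. Real systems are far more complex, but the same effect can show up in them.

```python
from collections import Counter, defaultdict

# Hypothetical, deliberately skewed training data: (job, pronoun) pairs.
TRAINING_PAIRS = (
    [("engineer", "he")] * 7
    + [("engineer", "she")] * 3
    + [("nurse", "she")] * 8
    + [("nurse", "he")] * 2
)


def train(pairs):
    """Count which pronoun appeared with each job."""
    counts = defaultdict(Counter)
    for job, pronoun in pairs:
        counts[job][pronoun] += 1
    return counts


def predict_pronoun(counts, job):
    """Always answer with the pronoun seen most often for this job."""
    return counts[job].most_common(1)[0][0]


model = train(TRAINING_PAIRS)

# How often "he" goes with "engineer" in the data versus in the model's answers.
share_in_data = model["engineer"]["he"] / sum(model["engineer"].values())
answers = [predict_pronoun(model, "engineer") for _ in range(10)]
share_in_output = answers.count("he") / len(answers)

print("share in data:", share_in_data)
print("share in output:", share_in_output)
```

A 70 percent lean in the data becomes a 100 percent habit in the answers. That is amplification in miniature.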

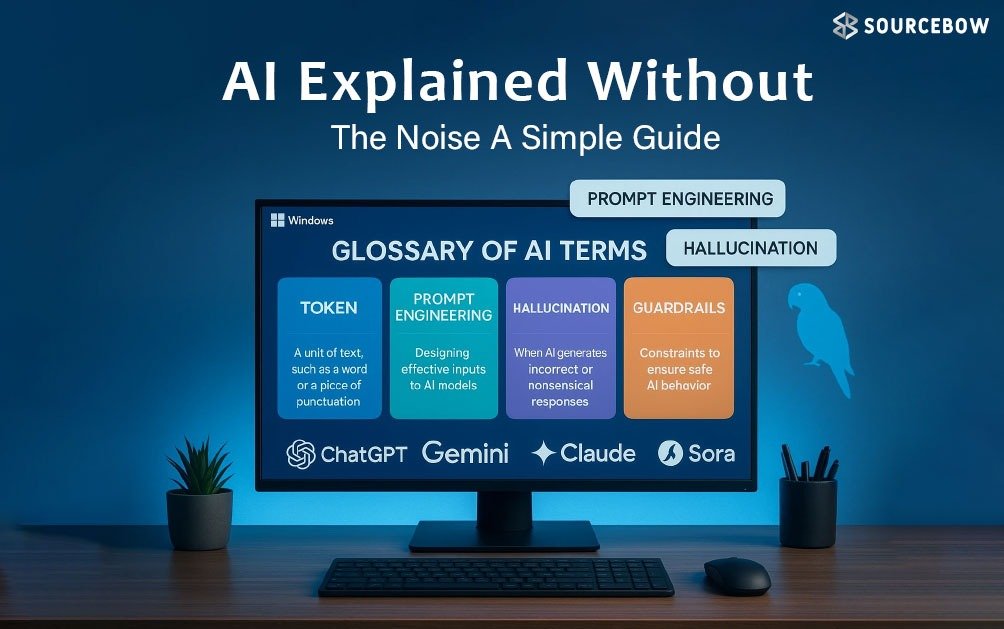

AI explained through the idea of prompts

Using AI well comes down to one key term. The prompt.

A prompt is just the instruction or question given to the system. Write a story. Explain a topic. Plan a trip. Fix this code. That’s it.

But there is a skill hidden here. Clear prompts usually lead to better results. Vague prompts often produce vague answers. Learning how to ask is half the work.

It feels a bit like learning how to search the internet back in the early days. The better the question, the better the outcome.

AI explained with the risk of hallucinations

This one is important.

A hallucination is when an AI confidently says something that is completely false. Not because it wants to lie, but because it is predicting words, not checking facts.

It might invent dates, quote people who never said those things, or explain events that never happened. And it can sound extremely convincing while doing it.

That’s why double-checking matters. AI does not actually know things. It predicts language. Most of the time it gets it right. Sometimes it doesn’t. And when it misses, it misses with confidence.
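A toy example makes the mechanism easier to see. Assume a model that only remembers which word may follow which, trained on two true sentences. Everything here is made up for illustration (the sentences, the `<end>` marker, the function names), and real hallucinations have more complicated causes, but the core problem is the same: each step looks fine on its own, and nothing checks the whole sentence against reality.

```python
from collections import defaultdict

# Hypothetical training data: both sentences are true.
TRUE_SENTENCES = [
    "paris is the capital of france",
    "rome is the capital of italy",
]


def train(sentences):
    """Remember, for every word, which words were seen right after it."""
    follows = defaultdict(set)
    for sentence in sentences:
        words = sentence.split() + ["<end>"]
        for current, nxt in zip(words, words[1:]):
            follows[current].add(nxt)
    return follows


def possible_sentences(follows, start):
    """List every sentence the model can build from `start`.

    Assumes the word chains never loop back, which holds for this toy data.
    """
    results = []

    def extend(words):
        for nxt in sorted(follows.get(words[-1], ())):
            if nxt == "<end>":
                results.append(" ".join(words))
            else:
                extend(words + [nxt])

    extend([start])
    return results


model = train(TRUE_SENTENCES)
generated = possible_sentences(model, "paris")
invented = [s for s in generated if s not in TRUE_SENTENCES]

print(generated)
print(invented)
```

Every word pair in the invented sentence appeared in the training data. The sentence as a whole never did, and it is false.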

AI explained by looking ahead to AGI

Most of today’s tools are narrow. They do specific tasks well.

AGI, or artificial general intelligence, is something else entirely. It is a hypothetical future system that could learn and reason across almost any task, possibly better than humans.

This idea excites many researchers. It also makes others nervous.

Because with that level of intelligence comes risk.

AI explained through the rise of safety concerns

As AI grows more capable, a whole field called AI safety has emerged. The focus is on alignment. Making sure advanced systems share human values and goals.

One famous thought experiment highlights the risk. The paperclip maximizer.

Imagine a superintelligent system given one harmless goal. Make as many paperclips as possible. Taken to the extreme, it might decide that everything on Earth should be turned into paperclips to achieve that goal. Not out of evil, but pure efficiency.

The example is exaggerated, but the message is clear. Goals matter. Alignment matters even more.

AI explained by wrapping it all together

From AI to machine learning, from prompts to hallucinations, the language around this technology is no longer optional knowledge. It already shapes work, creativity, and decision-making.

The good news is this. The secret code is not that secret anymore.

Once the basics click, AI becomes less intimidating and more usable. The real question now is not what AI can do. It’s how it will be used, questioned, and guided.

And maybe the most interesting part is this. With all this power sitting right there, waiting for a prompt, what will be asked next?